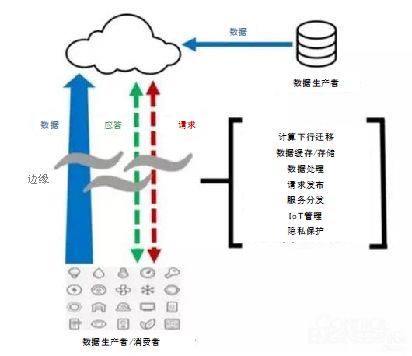

The popularity of artificial intelligence has brought many new terms with it, such as "machine learning". In the traditional approach, a machine executes instructions according to a given program. Machine learning based on artificial neural networks (ANNs) instead lets a machine learn and improve its operation or function automatically, without human input or intervention. The machine therefore appears able to learn and evolve on its own, which makes it more "intelligent". This matters most when judgments and decisions must be made in complex and unpredictable situations, such as a driverless vehicle responding correctly to road conditions.

The rise of machine learning

The way a machine "learns" closely resembles learning and cognition in the human brain. First, the ANN is "trained" on a set of data so that it acquires a certain kind of "knowledge". Afterwards, the machine draws on the knowledge and experience gained during training. When a new situation arises, it uses the trained ANN to carry out "inference" and make accurate judgments.

Machine learning is not actually a new concept, and there are specific reasons why it has become popular in the past two years. Consider the three elements of artificial intelligence, "ABC" (A: algorithms; B: big data; C: computing power). Algorithms attract much of the hype, but their breakthroughs have been limited in essence. Many algorithms in use today are results that sat on the shelf for years and have merely been repackaged. The development of the other two elements has played the decisive role in the rise of machine learning.
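The two phases described above can be illustrated with the simplest possible ANN: a single neuron (a perceptron). In the first phase it is "trained" on labelled data, and in the second it performs "inference" on inputs it has never seen. The following Python sketch is illustrative only. The perceptron learning rule is a deliberately minimal stand-in for real ANN training, and the toy dataset and function names are hypothetical.

```python
# Minimal sketch: a single artificial neuron (perceptron) stands in for an ANN.
# The toy data below is hypothetical and only illustrates the two phases.


def predict(weights, bias, x):
    """Inference: apply the learned weights to an input (step activation)."""
    s = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if s > 0 else 0


def train(samples, lr=1.0, max_epochs=100):
    """Training: adjust weights from labelled examples (perceptron rule)."""
    n = len(samples[0][0])
    weights, bias = [0.0] * n, 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, target in samples:
            err = target - predict(weights, bias, x)
            if err:
                errors += 1
                weights = [w + lr * err * xi for w, xi in zip(weights, x)]
                bias += lr * err
        if errors == 0:  # every training example is now classified correctly
            break
    return weights, bias


# Hypothetical training set: label 1 for points in the upper-right region.
TRAINING_DATA = [
    ((0.0, 0.0), 0), ((0.2, 0.3), 0), ((0.4, 0.1), 0),
    ((1.0, 1.0), 1), ((0.9, 0.8), 1), ((1.2, 0.6), 1),
]

if __name__ == "__main__":
    w, b = train(TRAINING_DATA)        # phase 1: "training"
    print(predict(w, b, (0.1, 0.2)))   # phase 2: "inference" on unseen input, prints 0
    print(predict(w, b, (1.1, 1.1)))   # prints 1
```

Training is the expensive, iterative part: it loops over the data until the weights stop changing. Inference is a single weighted sum per input. That asymmetry in cost is why the two phases can sensibly run on different hardware.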
Over the past 20 years, digitization and the Internet have allowed humanity to accumulate a vast amount of data. The rise and application of the big data concept in recent years has laid a solid foundation for machine learning, because it gives people access to large amounts of data that can be used for "training". On the computing side, steadily improving processor technology has made it possible to handle more complex and deeper ANNs. The "training" stage in particular consumes large amounts of computing resources, and a variety of platforms, such as GPUs, FPGAs, TPUs, and heterogeneous processors, have been developed to meet that challenge. Beyond improvements in the underlying processor hardware, cloud computing has been another important source of the computing power that machine learning requires. The centralized big data processing model built around cloud computing has pooled previously scattered computing resources in the cloud and made previously impossible computing tasks feasible. Cloud computing has therefore become an important foundational technology for the development of artificial intelligence.

Figure 1. Machine learning has spawned many innovative artificial intelligence applications, such as autonomous driving (Image source: Internet)

From cloud to edge

Today, however, the centralized cloud computing model faces challenges of its own. It has become increasingly clear that many artificial intelligence applications and machine learning scenarios occur at edge nodes of the network, closer to users.
Suppose all data must be uploaded to the cloud for analysis and judgment, and the resulting instructions are then sent back to edge nodes for execution. Such "long" communication links are limited by network bandwidth, which causes unacceptable latency and also raises problems of security and power consumption. This bottleneck forces a rethink of how computing resources should be allocated and calls for a more reasonable and efficient computing architecture. "Edge computing" arose in response. Centralized cloud computing transmits data to a remote cloud for processing. Edge computing instead handles data locally, so that embedded devices at the edge perform real-time data analysis and intelligent processing themselves. This follows a strategy of "processing the right data in the right place at the right time", and the resulting "cloud + edge" hybrid computing model will have a profound impact on future IoT architectures. Machine-learning-based artificial intelligence applications naturally have to take this shift "from cloud computing to edge computing" into account when their computing systems are deployed, and computing resources should be allocated so as to maximize data processing efficiency. The current general consensus is to perform ANN "training" in the cloud, making full use of its computing power, and to place "inference" on edge node devices. Together these form a complete and efficient machine learning system. This arrangement reduces the risk of network congestion, improves real-time performance, strengthens user privacy protection, and allows "inference" to continue even when the Internet is unavailable. For example, in a face recognition system, an ANN "trained" in the cloud can be deployed to the local client.
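Applied to the face recognition example, the "training in the cloud, inference at the edge" split can be sketched roughly as follows. This is a minimal Python sketch built on several assumptions. A tiny linear projection serialized as JSON (`CLOUD_EXPORTED_MODEL`, hypothetical) stands in for the cloud-trained ANN. Faces are compared by cosine similarity of embedding vectors. The helper names, the enrolled faces, and the 0.9 acceptance threshold are all illustrative and are not taken from any real face recognition product.

```python
import json
import math

# Hypothetical artifact "exported from the cloud": a tiny linear projection
# stands in for a trained ANN that maps raw face features to an embedding.
# A real deployment would ship a full network file in a framework-specific format.
CLOUD_EXPORTED_MODEL = json.dumps({"weights": [[0.5, 0.0, 0.5], [0.0, 1.0, 0.0]]})


def load_model(serialized):
    """Edge side: deserialize the weights that were trained in the cloud."""
    return json.loads(serialized)["weights"]


def embed(weights, features):
    """Local inference: project raw features onto an embedding vector."""
    return [sum(w * x for w, x in zip(row, features)) for row in weights]


def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def identify(weights, features, local_db, threshold=0.9):
    """Match a captured face against the on-device database; no network needed.

    Returns (name, score) for the best match, or (None, score) if the best
    score falls below the (hypothetical) acceptance threshold.
    """
    query = embed(weights, features)
    best_name, best_score = None, -1.0
    for name, reference in local_db.items():
        score = cosine(query, reference)
        if score > best_score:
            best_name, best_score = name, score
    if best_score >= threshold:
        return best_name, best_score
    return None, best_score


if __name__ == "__main__":
    weights = load_model(CLOUD_EXPORTED_MODEL)
    # Hypothetical enrolled faces, stored locally as embeddings.
    local_db = {
        "alice": embed(weights, [1.0, 0.2, 1.0]),
        "bob": embed(weights, [0.0, 1.0, 0.1]),
    }
    print(identify(weights, [0.9, 0.25, 1.1], local_db))  # best match: alice
    print(identify(weights, [1.0, -1.0, 1.0], local_db))  # below threshold: None
```

The model file and the reference database both live on the device, so `identify` never touches the network. This is where the privacy and offline-availability benefits of edge inference come from. Only retraining, the expensive step, needs the cloud.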
When the camera captures a face, the processor of the local embedded device performs the "inference". It compares the captured face against the face information stored in a local database to complete the recognition. This is a classic example of "training in the cloud, inference at the edge".

Figure 2. Edge computing architecture (Image source: Network)

Machine Learning on Embedded Systems

This "cloud-edge integrated" computing architecture looks attractive, but many problems remain in implementing it. The most direct one is that embedded devices working at the network edge are usually resource-constrained. Limits on power consumption, cost, and form factor rule out the "luxury" of cloud-scale computing power. Achieving "machine learning on embedded systems" therefore requires special measures at every stage from hardware design to software deployment. From another angle, however, this brings new opportunities to embedded system design: although such systems sit at the "edge" of the network, they have in fact become the "center" of attention.

The entire industry chain has been actively pursuing embedded machine learning. ARM, for example, is an embedded processor technology supplier at the upstream end of the industry chain, and it has announced machine learning processors and the neural network machine learning software ARM NN. ARM NN bridges existing neural network frameworks (such as TensorFlow or Caffe) and the underlying hardware processors on embedded Linux platforms. This lets developers run machine learning frameworks and tools seamlessly on embedded platforms.
ARM NN software bridges the neural network frameworks needed for machine learning and embedded hardware (Image credit: ARM)

The efforts described above, originating from the two ends of the industry chain, will eventually "shake hands" at certain points of convergence and combine into a joint force that drives embedded machine learning forward. By then, interest in embedded machine learning will have grown even stronger.